At the heart of the dilemma over how to handle the plague of hateful and harassing comments online are questions of free speech, local laws and who should decide what can be said by whom.

Historically, internet companies have benefited from well-established safe harbors from liability for the speech of their users, an approach that has helped enable the Web to become the creative and impactful environment it is today. However, hate speech and harassment have flourished online, and efforts by global platforms like Facebook, YouTube and Twitter to respond have been inconsistent and largely ineffective.

Germany (with a population of nearly 83 million people) recently thrust itself into the global spotlight on this question, implementing a law in 2018 intended to reduce hate speech and defamation online. The law introduces steep fines for popular social media companies if they do not take down manifestly unlawful content within 24 hours of a notification, and other unlawful content within seven days.

The Network Enforcement Act (NetzDG) was praised by some politicians as an important measure to curb hate speech and vehemently opposed by others. It was widely criticized by digital rights groups concerned about threats to free speech and overbroad takedowns. From abroad, it was observed with glee by governments that limit free speech. Russia, Venezuela and Kenya are among the countries that quickly designed their own versions of the law.

In Germany, one year after implementation, the new law seems to be neither particularly effective at solving what it set out to do, nor as restrictive as many feared. However, without more insight into the kinds of notices that are being sent and the methods and guidelines platforms have adopted to handle them, it’s difficult to assess the real impact.

NetzDG was designed to put the onus on companies to moderate content and remove it quickly. Germany’s Federal Office of Justice can fine companies up to 50 million euros (US$56.3 million) if platforms fail to comply with valid removal requests by users or authorities. After the law was passed, Facebook and Twitter said they hired additional moderators in Germany to review content flagged as problematic by users or algorithms.

To comply with the law, Facebook, Google+, YouTube and Twitter each published reports in July 2018 and December 2018 detailing how they enabled users to file complaints and how they dealt with those complaints. So far, the number of content takedowns reported by platforms appears low compared to the number of complaints received.

Twitter, for example, said they received 256,462 complaints between July and December 2018 and took action on just 9%. Facebook said they saw 1,048 complaints and took down just 35.2% of reported content. What these complaints were about, or why so many were rejected, is unknown. Independent researchers have no access to raw data, and there is no standardized reporting process between platforms. The numbers are open to interpretation from every angle.

“If we want to better understand how companies make decisions about acceptable and unacceptable speech online, we need a more granular understanding of case-by-case determinations,” wrote researchers from Germany’s Alexander von Humboldt Institut für Internet und Gesellschaft in reaction to the reports. They call for greater transparency and insight in order to understand what the effect of the law has been: “Who are the requesters for takedowns, and how strategic are their uses of reporting systems? How do flagging mechanisms affect user behavior?”

While most platform content rules are understood to be based on terms of service, community guidelines and other user policies, relatively little is communicated directly by platforms about how they enforce their own rules on prohibited content.

In Germany, an opportunity to come out of a contentious and politicized debate about harmful content with greater knowledge and better solutions has so far not materialized. Greater transparency around the sources of hateful and violent speech online, who reports it and how takedowns are approached by intermediaries would be an important step toward understanding how to foster a healthier internet for all.

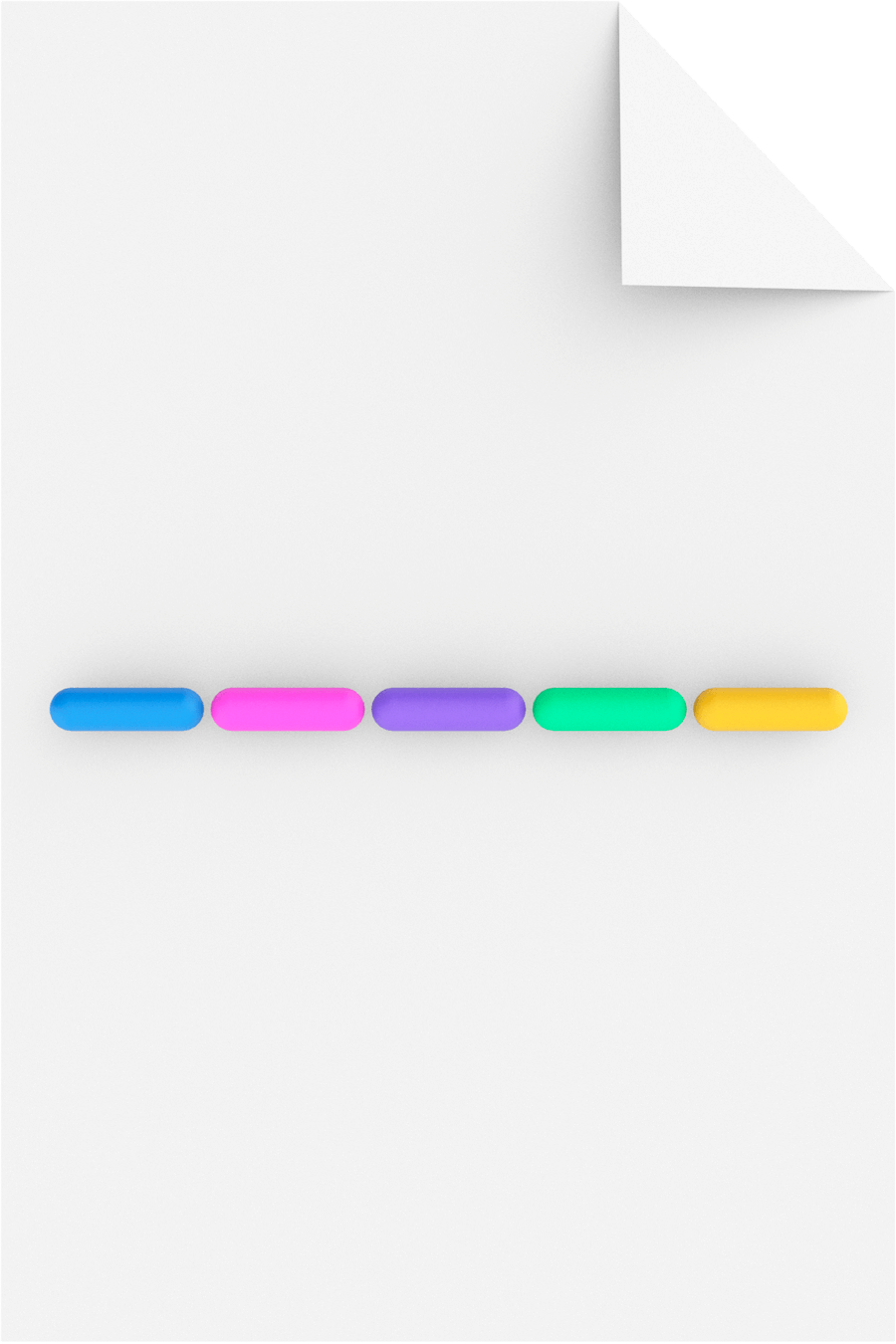

Removals of content under the Network Enforcement Law in Germany

What the platforms reported from July to December 2018

NetzDG Transparency Reports by Facebook, Google and Twitter for July – December 2018. Table inspired by “Removals of online hate speech in numbers” by Kirsten Gollatz, Martin J. Riedl and Jens Pohlmann. In: Digital Society Blog, 2018

What do you think is the best solution to curbing hateful speech online?

The NetzDG is actually used to suppress opinions from the political right in Germany. This is censorship, and it is getting worse day by day. Saying something that differs from the political mainstream can be harmful in Germany. If your influence is big enough, the wrong opinion can be harmful. A group called the Antifa threatens everyone who does not say the right things. They beat you up or burn your car, just for saying something they call “rechts” (right-wing). “Rechts” has an evil connotation in Germany, and it has become a buzzword that only means: wrong political opinion. It's hard to say that and it hurts me, but I am a German and I see all this shit. Ten years ago this country was a completely different world, much more free, secure and friendly.

It seems like Germany didn't learn its lesson from WW2. The Weimar Republic tried to suppress hateful speech. All it did was give Hitler a bigger platform and make him a martyr to the people who followed him. These people seem to think that if you can stop hateful speech, hateful ideas just magically disappear. That is a naive and simplistic view of how the world works.

As long as the platforms are classic companies and the platform is already cleared of, for example, police or state-critical content, it is only reasonable to expect that hatred against marginalized groups will be fought within a reasonable time with the means of the rule of law. If you want a decentralized network that is truly censorship-free and anonymous, the software must be designed differently. In such a case, however, users should still be able to decide which content will spread and which will not, as is the case with Freenet, for example.

Free speech/association and a government mandate to not allow certain speech to be published are not compatible. Germany has decided to embrace its authoritarian roots; it just remains to be seen whether this kind of censorship is used as justification for legal suppression in any areas where less "progressive" constituencies take power.

Self-discipline when posting on the net. No amount of laws can solve this problem.

Preferably, each person must be ultimately responsible for what they say, not the platforms. If people are validated and can be punished for their words, then platforms don't need to make judgements. Nowadays many platforms already censor comments just because they are not politically aligned, and this is censorship.